10

Apr 12

Website Architecture for SEO: The Complete Guide

If you want to “Bring Back the SEO Cup”, you would do well to emulate the folks who designed and built the “Stars and Stripes” yacht back in 1986 and ensure that your website has a highly optimized design.

There are a number of SEO design principles for website architecture that have been extensively written on in the industry, including “Mapping Keywords to Content”, “Siloing”, “Keeping Your Website Shallow”, “PageRank Sculpting”, and “Internal Anchor Text Sculpting”. These principles are important because although you may not be able to control how much the outside world links to you, how your website is organized is completely under your own control, so you should take full advantage of your options in designing it.

This article will walk through the theoretical basis of each, so you can understand not only how to apply these principles, but *why* you should.

*Shout-out to my father, Dave Ives, who wrote an early Fast Fourier Transform computer program that ended up being used to help optimize the hydrodynamic design of the Stars and Stripes ’87 – great work Dad!

Principle 1: Mapping Keywords to Content

“Mapping Keywords to Content” is the principle of making each content page be “about” one keyword. This means the keyword should be (ideally) the most frequent word in the document, and it should be in the title, the URL, and the Meta-Description (H1 and Image Tag as well if you are really into optimization). Just as important however, the page should not be about *another* keyword.

The reason is that if you attempt to target multiple keywords, the document will generally not be sufficiently about the first term to rank for that term, and it won’t be sufficiently about the second term to rank for *that* term either – so instead it will rank poorly for both terms. I walk through the thinking on this in my recent posting “How to Unoptimize Your Content for Maximum Rankings”.
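As a rough illustration of the placement checks described above, here is a minimal sketch. The `page` dictionary, its field names, and the `keyword_placement` helper are all hypothetical, and a simple substring test is only an approximation of how a search engine reads a page.

```python
import re

def slugify(text):
    """Lowercase the text and join its alphanumeric runs with hyphens."""
    return "-".join(re.findall(r"[a-z0-9]+", text.lower()))

def keyword_placement(keyword, page):
    """Report which on-page fields contain the target keyword.

    `page` is a hypothetical dict with 'title', 'url',
    'meta_description' and 'h1' keys.
    """
    kw = keyword.lower()
    return {
        "title": kw in page["title"].lower(),
        "url": slugify(keyword) in page["url"].lower(),
        "meta_description": kw in page["meta_description"].lower(),
        "h1": kw in page["h1"].lower(),
    }

# Made-up page data for the example.
page = {
    "title": "Blue Tennis Shoes | Foo Store",
    "url": "www.foo.com/shoes/blue-tennis-shoes.htm",
    "meta_description": "Shop our range of blue tennis shoes.",
    "h1": "Tennis Shoes in Blue",
}

report = keyword_placement("blue tennis shoes", page)
missing = [field for field, present in report.items() if not present]
print(missing)  # the H1 reorders the words, so it is flagged
```

Running the same check against a second keyword on the same page is a quick way to spot a page that is accidentally "about" two terms.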

Targeting Multiple Keywords with One Page

The one-keyword-to-one-page principle can be broken or relaxed, but I don’t generally recommend it. If you have several terms that are highly related, and really don’t have the resources to create multiple pages, you can group them and sometimes you’ll have fair results. Don’t go crazy though; if you do, you’ll end up with what Rand Fishkin calls a “Frankenpage” in his great video “Mapping Keywords to Content for Maximum Impact”.

If you’d like to see a specific example, I also wrote an article where I broke this rule and attempted to get the article itself to rank for multiple terms:

RI SEO Company Case Study for Rhode Island Search Engine Optimization

The results were that the page is currently ranking, as of the writing of this article, #2 for a few of the terms, #4 for a few, and #10-17 or so for the other terms. However, I assure you that if I had instead created eight separate pages and optimized each one for each term, those #10-17 ranking terms would be ranking *much* higher.

Knowing when you can get away with the multiple-keyword-targeting approach is difficult, and it’s also somewhat of an art; in my case I did so because I didn’t want to have eight *really* boring articles on my blog. You’d be hard pressed in most cases, however, to find a writer who is proficient at optimizing for multiple terms; targeting one term is much more systemizable – you can instruct the writer on how to run keyword density analyses and so on. So, on the whole, it’s much simpler to target each page at a single keyword, and doing so provides more predictable results.
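For writers who want to run a keyword density analysis themselves, here is a minimal sketch. It assumes one common definition of density (the words belonging to occurrences of the phrase, divided by the total word count); other tools define it differently, and the function names are my own.

```python
import re

def tokenize(text):
    """Split text into lowercase words."""
    return re.findall(r"[a-z0-9']+", text.lower())

def keyword_density(text, keyword):
    """Share of the words in `text` that belong to occurrences of `keyword`."""
    words = tokenize(text)
    phrase = tokenize(keyword)
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    hits = 0
    i = 0
    # Count non-overlapping occurrences of the phrase.
    while i <= len(words) - n:
        if words[i:i + n] == phrase:
            hits += 1
            i += n
        else:
            i += 1
    return hits * n / len(words)

sample = "Blue tennis shoes are popular. Our blue tennis shoes ship free."
density = keyword_density(sample, "blue tennis shoes")
print(f"{density:.1%}")
```

The sample text has 11 words and two occurrences of the three-word phrase, so the density works out to 6/11.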

You’ll Naturally Bring in Long-Tail Traffic When You Write Anyway, Without Trying

It’s important to note that each page will generally also bring in traffic on other keyword variations; by targeting a specific keyword you’re thinking as a writer of “exact match” searching, but you’ll rank for some “phrase match” variations and some “broad match” variations as well. Let’s say you create a page on [blue tennis shoes] – maybe that page will also bring in traffic on the terms “blue tennis shoes cheap” and “blue tennis sneakers”. Maybe it will also bring in traffic on a few unpredictable terms, based on whatever you happened to write. Perhaps on your page for [blue tennis shoes] you mentioned the related keyword [sneaker lace eyelets] and there’s little content on the web on that – you might actually pick up some “long tail” traffic on that term.
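To make the three match types concrete, here is a small sketch that classifies a search query against a target keyword. Treat the "broad" rule as a crude stand-in: real broad matching also considers synonyms and related terms, whereas this hypothetical `match_type` function only looks for shared words.

```python
def match_type(target, query):
    """Classify how a search query relates to a target keyword."""
    t = target.lower().split()
    q = query.lower().split()
    if q == t:
        return "exact"
    n = len(t)
    # Phrase match: the whole keyword appears in order inside the query.
    if any(q[i:i + n] == t for i in range(len(q) - n + 1)):
        return "phrase"
    # Simplified broad match: the query shares at least one word.
    if set(t) & set(q):
        return "broad"
    return "other"

for query in ["blue tennis shoes", "blue tennis shoes cheap",
              "blue tennis sneakers", "sneaker lace eyelets"]:
    print(query, "->", match_type("blue tennis shoes", query))
```

The last query shares no words with the target at all, which is exactly the kind of unpredictable "long tail" term the page can still pick up.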

My series on Estimating Organic Search Opportunity, which includes a downloadable spreadsheet you can use for organic traffic potential estimation, uses broad match numbers as the basis for its estimates, to take these effects into account.

Your Home Page Can Be Problematic

There is one major exception to this rule that you can’t avoid however: your *home page*! Most businesses aspire to bring in customers on a variety of keywords, and most of their PageRank is coming to their home page, so there is almost always a desire to have the home page rank for multiple terms.

Very little has been written on Home Page Optimization, and I don’t have any systematic advice for you on that topic, but I would encourage you to examine all the anchor text from external links coming in to your home page, think about how much your brand name should be mentioned versus generic terms you’re trying to rank for, then optimize your home page for multiple keywords. Rand Fishkin did a nice piece on multi-keyword optimizing that might be worth reviewing if you’re faced with this task:

http://www.seomoz.org/blog/tactical-seo-how-many-termsphrases-should-i-target-on-a-single-page

Principle 2: Siloing

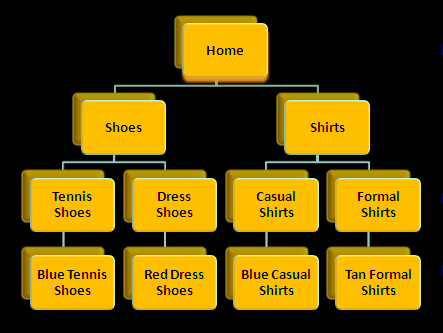

“Siloing” is the principle of organizing a website’s architecture into a hierarchy of meaning, where more generic terms funnel down to more specific terms within a category. Figure 1 shows a “silo” design for a theoretical e-commerce site. You’ll note that in this case, each level is actually a phrase variation of the level above (i.e. the phrase [shirts] gets [formal] added to it at the next level, then [formal shirts] gets [tan] added to it):

There are four reasons to design your website’s architecture in this fashion:

1.) It is intuitive and easy to navigate for users because people tend to think in categories

2.) It is easier to maintain a website that is not a rat’s nest of links

3.) It is easier to perform “PageRank Sculpting” and “Internal Anchor Text Sculpting” for a site organized in this fashion.

4.) You will typically have the website organization reflected in the URLs that are created.

Most of these points are self-explanatory, but in the case of website organization affecting your URLs, let’s look at the example. The [blue casual shirts] page might reasonably have the following address, which just falls out of the way the website is organized:

www.foo.com/shirts/casual-shirts/blue-casual-shirts.htm

In my article “Google’s Secret Ranking Algorithm Exposed”, we saw how having the keyword in the URL is a helpful ranking factor; having both the page named with the keyword, and having the less-specific variations of the keyword appear in the URL, will probably help it to rank somewhat. Also, many websites have a “Breadcrumb” trail, and having the keywords on-page in the Breadcrumb may help as well.

It is important to note however that you should *not* have your website architecture completely drive your URLs. For example, in this case, it is probably better to have the URL for the [blue casual shirts] page be:

www.foo.com/casual-shirts/blue-casual-shirts.htm

That way we’re not saying “shirts” three times (which could actually hurt us, that’s over-optimizing perhaps), and the URL will be shorter (the yearly correlation studies by SEOMoz have consistently shown that URL length is slightly inversely correlated with ranking). The page could still be located in the hierarchy as Figure 1 illustrates it; we’re simply talking about what URL is shown for it.
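Here is a minimal sketch of how the two URLs above could be generated from a page’s position in the silo. The `silo_url` function and its `keep_levels` parameter are hypothetical; a real CMS would handle this through its own URL rewriting rules.

```python
import re

def slugify(name):
    """Lowercase the name and join its alphanumeric runs with hyphens."""
    return "-".join(re.findall(r"[a-z0-9]+", name.lower()))

def silo_url(domain, path, keep_levels=None):
    """Build a URL from a silo path, optionally showing only the last levels.

    `path` lists the page names from the top category down to the page.
    `keep_levels` (1 or more) limits how many trailing levels appear.
    """
    levels = path[-keep_levels:] if keep_levels else path
    return domain + "/" + "/".join(slugify(p) for p in levels) + ".htm"

path = ["Shirts", "Casual Shirts", "Blue Casual Shirts"]
full_url = silo_url("www.foo.com", path)
short_url = silo_url("www.foo.com", path, keep_levels=2)
print(full_url)   # www.foo.com/shirts/casual-shirts/blue-casual-shirts.htm
print(short_url)  # www.foo.com/casual-shirts/blue-casual-shirts.htm
```

The page keeps its place in the hierarchy either way; only the displayed URL changes.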

It is also important to note that you don’t necessarily have to have ever-lengthening phrases in your hierarchy as in this example; we could have a “Shoe” category and have a “Tennis Sneakers” subcategory for instance – having them be related makes sense but they don’t have to be phrase variations of each other.

So, all things being equal, it makes sense to organize a website into a hierarchy, that goes from the less specific to the more specific, then consider mapping your URLs in such a way that they partially represent the organization of the website, but perhaps not fully.

Bruce Clay has a good case study on Siloing here if you’d like to read more on this principle:

http://www.bruceclay.com/seo/silo.htm

Principle 3: Keeping Your Website Shallow

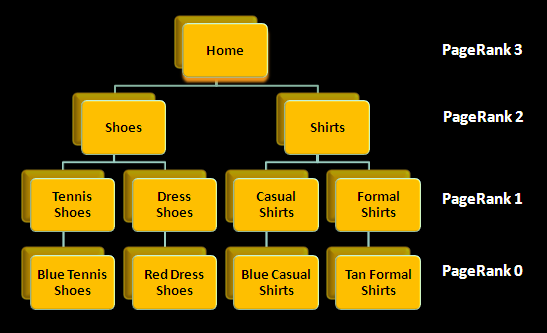

“Keeping Your Website Shallow” is the principle of not having too many levels in your hierarchy. Now, the “Siloing” principle actually conflicts with “Keeping Your Website Shallow”. The reasoning for a shallow hierarchy is a little technical but stay with me here; it has to do with PageRank decaying. Intuitively, a link from a PageRank 5 website will not make your page a PageRank 4 website; this is because PageRank has a built-in decay factor.

For most websites though, a large percentage of links to a website tend to be to its home page. Even if this is not the case, because the Home Page typically has a site-wide link on every page, it will earn a lot of PageRank from internal links (although PageRank conferred by internal links is speculated to be less than PageRank conferred by incoming external links). So, in most cases, the Home Page will have the highest PageRank of any page on your website, and as a result, can be thought of as “powering” your site from a PageRank perspective.

Let’s take our example and assume that the Home Page has a PageRank of 3. Per figure 1 in my article “How to Estimate How Many Links You Need”, any page it links to will likely be a PageRank 1 web page, just from the one link. However, a website differs from the external web, because a website is its own small interconnected world. Hierarchy and leaf pages of the website tend to point at each other, through breadcrumbs, related content links, and the like; so what you will typically observe is that a PageRank 3 home page will tend to have PageRank 2 pages underneath it, followed by PageRank 1 pages, and so on:

We could construct an entire typical website hierarchy, with cross-links, breadcrumbs, and site-wide links, and work to prove this principle, but I have seen this pattern over and over and over on website after website, so you should poke around in the real world and satisfy yourself that this is generally true.
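If you’d like to experiment rather than take my word for it, here is a minimal sketch using the originally published PageRank formula with the commonly cited damping factor of 0.85. The tiny site graph, the page names, and the function name are made up for illustration; the raw scores it produces are not the 0-10 toolbar values discussed above, and Google’s production algorithm is certainly more involved.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Iterate the classic PageRank update over a {page: [outlinks]} graph."""
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page starts each round with the small "random jump" share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outlinks in links.items():
            # A page with no outlinks spreads its rank over all pages.
            targets = outlinks or pages
            share = damping * rank[page] / len(targets)
            for target in targets:
                new_rank[target] += share
        rank = new_rank
    return rank

# Hypothetical three-level site: every page links home (site-wide link)
# and each leaf links to its parent (breadcrumb).
site = {
    "home": ["shirts", "shoes"],
    "shirts": ["home", "formal-shirts", "casual-shirts"],
    "shoes": ["home", "tennis-shoes", "dress-shoes"],
    "formal-shirts": ["home", "shirts"],
    "casual-shirts": ["home", "shirts"],
    "tennis-shoes": ["home", "shoes"],
    "dress-shoes": ["home", "shoes"],
}

ranks = pagerank(site)
for page in sorted(ranks, key=ranks.get, reverse=True):
    print(f"{page:15s} {ranks[page]:.3f}")
```

In this toy graph the home page comes out on top, the category pages next, and the leaf pages last, which mirrors the level-by-level drop described above.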

As an example though, take a look at the following real-world hierarchy and you’ll get the general idea:

Figure 3 – An Example Hierarchy from Amazon

It’s true that this rule of thumb is not perfect; for instance, you will often see a level drop by a factor of two, probably due to internal linking not being perfectly organized.

On some websites, you might see PageRank being the same at two different levels – for instance, a Home Page of PR5 pointing to a subsection that is also PR5. Typically this is because the Home Page is not a 5.0, it’s really a 5.8 (in other words, a near-6); Google doesn’t report PageRank in any form other than integers. So if all your sub-pages are the same PageRank as your Home Page, it can be a helpful clue in figuring out that your Home Page is actually fairly high in its integer class, and some link building efforts have a shot at moving it up a level.

You may also see that some pages may have more PageRank than the Home Page; if this is the case, it doesn’t mean you have a problem – it means the lower-level page probably is getting some strong external PageRank, and represents an opportunity for some PageRank sculpting, as we’ll discuss later.

Now that you’ve gotten a feel for how PageRank decays, a light bulb should be going off above your head:

The *fewer steps* of decay from the home page, the more PageRank a particular leaf page will have!

THIS is why you should have a fairly shallow website hierarchy – the fewer the levels, the more PageRank the pages at each level will inherit from the Home Page (and each other as well).

The ultimate website design from this standpoint would involve one home page with links to thousands of web pages right on the home page, and a 2-level hierarchy. For practical purposes however, this would be almost unnavigable by users; so most advice you see from SEO Gurus involves making sure you are no more than 3-4 levels deep. The bottom line is, the fewer the levels the better. Don’t be afraid of having upwards of 1,000 links on a web page though, if absolutely necessary – Google used to only crawl a few hundred per page, but for the last few years having up to 1,000 has worked fine. I wouldn’t recommend any more than 1,000 however, because page load time starts to become a significant consideration beyond that size.
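One way to check how deep a site really is: run a breadth-first search from the home page and count clicks. This is a minimal sketch; the link graph and the three-click threshold are assumptions for the example, and in practice you would build the graph from a crawl.

```python
from collections import deque

def click_depths(links, home):
    """Return the minimum number of clicks from `home` to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical site where one page sits four clicks from the home page.
site = {
    "home": ["shirts"],
    "shirts": ["formal-shirts"],
    "formal-shirts": ["tan-formal-shirts"],
    "tan-formal-shirts": ["tan-formal-shirts-xl"],
    "tan-formal-shirts-xl": [],
}

depths = click_depths(site, "home")
too_deep = [page for page, depth in depths.items() if depth > 3]
print(too_deep)
```

Any page that shows up in `too_deep` is a candidate for a more direct link from a higher level.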

Principle 4: PageRank Sculpting

If you’ve been around the SEO industry for a while, you may be thinking “this guy is talking about PageRank Sculpting – what a moron, he must believe that “nofollow” matters!” Well, as a matter of fact, I do believe “nofollow” matters, but either way, PageRank Sculpting encompasses much more than that one issue, and is actually performed unconsciously by *everyone* designing a website, whether they know it or not.

“PageRank Sculpting” is the principle of figuring out what content has the most potential to rank and bring in traffic and conversions, based on opportunity, and then purposely designing your website so that PageRank will be routed to those pages where it will have the highest return.

If you’re new to SEO, you might be thinking “Really? That sounds a little over the top – people actually go to the effort to do this?” The answer is a resounding “Yes”. Internal links are the *easiest* links to control. If you’re spending a ton of time and resources on outreach but haven’t gone through the effort of thinking about how PageRank flows around your site internally, you can be assured that it is definitely a worthy exercise.

The “nofollow” attribute

“nofollow” is a property of links that was introduced some years back to combat link spam (download any of the myriad of SEO browser plug-ins available from SEOQuake, SEOMoz, SEOBook, etc. if you’d like to be able to have links presented in your browser with strikeout marks identifying which ones are “nofollow” links). The idea was that webmasters would automatically append a “nofollow” attribute in the HTML of links, and then the search engines would ignore those links for PageRank purposes – thus removing the incentive for comment spammers and their ilk.
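In the HTML itself, the attribute appears as `rel="nofollow"` on the link’s `<a>` tag. If you’d rather audit a page without a browser plug-in, here is a minimal sketch using Python’s standard-library HTML parser; the `LinkAudit` class and the sample markup are made up for the example.

```python
from html.parser import HTMLParser

class LinkAudit(HTMLParser):
    """Sort the links on a page into followed and nofollowed lists."""

    def __init__(self):
        super().__init__()
        self.followed = []
        self.nofollowed = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if href is None:
            return
        # rel can hold several space-separated tokens.
        rel_tokens = (attributes.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.nofollowed.append(href)
        else:
            self.followed.append(href)

sample_html = """
<a href="/shirts/">Shirts</a>
<a href="/terms.htm" rel="nofollow">Terms of Use</a>
<a href="http://example.com/" rel="external nofollow">Commenter site</a>
"""

audit = LinkAudit()
audit.feed(sample_html)
print("followed:", audit.followed)
print("nofollowed:", audit.nofollowed)
```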

How to Use the “nofollow” Attribute

It is my belief that by using the “nofollow” attribute, you *can* have your cake and eat it too. You may prefer to starve certain pages from a PageRank perspective, so that other pages can be better fed, or conversely, you may want to route PageRank from a particularly PageRank-laden page to another page with high traffic potential. If you can identify all the internal links pointing at the pages you want to starve, you can “nofollow” all of them, deny the PageRank from “flowing” there, and preserve it for the other pages.

But Hasn’t Google Stated That “nofollow” Doesn’t Matter?

Let’s talk religion for a minute. Since the introduction of this attribute, Google has made several conflicting, frankly waffling statements about whether or not it “respects” the nofollow attribute.

Please don’t bother posting the 4 or 5 Matt Cutts webmaster videos on it below, I’ve seen them all! It’s important to note that in such videos Matt often uses language like “I wouldn’t worry about [insert SEO topic here] much”. The word “much” is a big red flag that I interpret to mean: worry about it a little – i.e. – it absolutely matters! SEO, after all, is about worrying about a myriad of little details, which individually don’t matter much, but can add up to a big win.

Also, it’s important to note that any statement Google made 3 months ago, or two years ago could be completely *wrong* now anyway; Google changes its ranking algorithms all the time.

SEOMoz Data Indicates “nofollow” Matters

SEOMoz’s most recent yearly ranking correlation study (2011 as of the writing of this article) appears to show that “nofollow” and “followed” links are treated *identically* by Google (see “Followed Linking C-Blocks” and “Nofollowed Linking C-Blocks”) here – they have identical correlation values.

http://www.seomoz.org/article/search-ranking-factors#metrics-5

(Side note – Links from a single page can be thought of as unique page links, links from two pages on a single domain can be thought of as links from a unique domain, and links from a C-Block are links from a unique range of IP addresses. It’s been pretty well demonstrated that multiple links from a page, a domain, and from a single C-Block of IP addresses are subject to diminishing returns.)
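To illustrate the three levels of uniqueness in the side note, here is a small sketch that counts unique linking pages, domains, and C-Blocks for a list of incoming links. It assumes IPv4 addresses and the usual SEO meaning of a C-Block (addresses sharing their first three octets); the link data and function names are invented for the example.

```python
import ipaddress

def c_block(ip):
    """Return the first three octets of an IPv4 address."""
    address = ipaddress.IPv4Address(ip)  # raises ValueError if malformed
    return ".".join(str(address).split(".")[:3])

def unique_link_counts(links):
    """Count unique pages, domains and C-Blocks in (page, domain, ip) tuples."""
    pages = {page for page, _, _ in links}
    domains = {domain for _, domain, _ in links}
    blocks = {c_block(ip) for _, _, ip in links}
    return {"pages": len(pages), "domains": len(domains), "c_blocks": len(blocks)}

# Made-up incoming links using reserved documentation IP ranges.
incoming = [
    ("a.example/1", "a.example", "192.0.2.10"),
    ("a.example/2", "a.example", "192.0.2.10"),
    ("b.example/1", "b.example", "192.0.2.77"),
    ("c.example/1", "c.example", "198.51.100.5"),
]

counts = unique_link_counts(incoming)
print(counts)
```

Four linking pages collapse to three domains and only two C-Blocks here, which is why the C-Block count is the most conservative of the three measures.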

Anecdotal Evidence that “nofollow” Matters

I have some anecdotal evidence that “nofollow” matters in addition to the SEOMoz study. On several of my clients’ websites, the “Terms of Use” and “Privacy Policy” pages showed significant PageRank (ranging from PR1 to PR4). This represents a complete waste of internal PageRank. After making sure that any internal links to these pages had “nofollow” added to them, their reported PageRank values were changed by Google to return “N/A” instead of the original PageRank value.

It is my belief that once a web page reaches a level of PageRank, if it is demoted, Google automatically throws it into the “N/A” bucket. I believe the “N/A” PageRank category exists for the following reasons:

1.) To obscure reporting and make it more difficult to do these kinds of tests

2.) So that large companies (especially influential advertisers 😉) will not bother Google with requests to explain why the PageRank of a website or a particular page has dropped.

3.) To obscure manual PageRank penalties.

Side Note: On the third point, often sites have what appears to be sufficient incoming links to reach a particular PageRank level, but Google displays a level severely below that, or zero, or “N/A”. It is my belief that in most of these cases Google has actually applied a special form of manual penalty that reduces the reported PageRank value itself.

For instance, Aaron Wall has previously written about his belief that SEOBook has experienced a penalty along these lines applied in the past; also Google recently admitted that they applied a manual PageRank penalty to its own Chrome Page, so this proves that manual PageRank penalties do exist:

http://searchengineland.com/google-chrome-page-will-have-pagerank-reduced-due-to-sponsored-posts-106551

At any rate, “nofollow”-ing links appeared to change the reported values for the target pages from numeric PageRank numbers to “N/A”, so I take that as encouragement that “nofollow”-ing links works from a PageRank sculpting perspective.

How to *Force* Google to Respect “nofollow”

Either way, there is a simple way to “nofollow” any link in such a way that Google will respect it, whether or not it respects “nofollow” – simply *remove the link*. A link that doesn’t exist can’t pass PageRank! So, PageRank sculpting for a website can at *least* be done from the perspective of deciding what links to include on which pages – and in my experience “nofollow” seems to help – so I recommend acting as if it does.
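To see the effect of removing a link, here is a minimal sketch that compares scores before and after dropping the home page’s link to a “Terms” page. It uses the originally published PageRank formula with a damping factor of 0.85 on a made-up three-page site, so it models link removal only; it says nothing about how Google actually treats “nofollow”.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Iterate the classic PageRank update over a {page: [outlinks]} graph."""
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outlinks in links.items():
            # A page with no outlinks spreads its rank over all pages.
            targets = outlinks or pages
            share = damping * rank[page] / len(targets)
            for target in targets:
                new_rank[target] += share
        rank = new_rank
    return rank

def without_links_to(links, target, keep_on=()):
    """Copy the graph, dropping links to `target` except on pages in `keep_on`."""
    return {
        page: [t for t in outlinks if t != target or page in keep_on]
        for page, outlinks in links.items()
    }

# Hypothetical site: the home page links to a product page and to Terms.
site = {
    "home": ["product", "terms"],
    "product": ["home", "terms"],
    "terms": ["home"],
}
sculpted = without_links_to(site, "terms", keep_on=("product",))

before = pagerank(site)
after = pagerank(sculpted)
for page in site:
    print(f"{page:8s} before={before[page]:.3f} after={after[page]:.3f}")
```

In this toy graph the product page gains score and the Terms page loses it once the home page stops linking to Terms, which is the routing effect sculpting is after.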

Summary of PageRank Sculpting Aspects to Consider

PageRank Sculpting can get pretty complicated, and it’s tough to lock down every route through which PageRank might flow to a particular page. If you decide to pursue this approach, consider the following aspects:

1.) What pages are high-potential pages that you want to preferentially route PageRank to?

2.) What pages are low or no-potential pages you want to keep PageRank from leaking to?

3.) Which links on the home page should you have?

4.) Which links on the home page should you “nofollow”?

5.) What should your breadcrumbs look like, and should any of those links be “nofollow”-ed?

6.) Which site-wide links should be “nofollowed”?

7.) If you have any pages that have very high PageRank besides your home page (perhaps a sub-page or leaf page that has a lot of external links), is it worth placing links to key pages *directly on* that page, just for the purpose of encouraging that PageRank to flow to those pages, even if it requires handling it as an exception?

8.) How will Googlebot discover pages, crawl through your site, and flow PageRank through links?

Principle 5: Internal Anchor Text Sculpting

Internal Anchor Text Sculpting (my term for it) is the principle of obtaining internal links to each page using the targeted keyword as the anchor text of the links. This is similar to PageRank Sculpting, but where PageRank is numerically oriented, Internal Anchor Text Sculpting is oriented around *relevance*.

It’s been well documented that Google utilizes anchor text in incoming links to figure out what a page (and even a website in general) is “about”. Part of your website architecture should take into account making sure that internal anchor text is consistent and is using the keyword you have targeted for each page. In our example for instance, the page “Blue Tennis Shoes” should have a number of links elsewhere in the site (at least on the parent page, and probably on related pages) that say [Blue Tennis Shoes].

It is thought that it is important to make sure that external links coming into a site have a “natural distribution” of anchor text. A “natural distribution” for instance could mean something like, 33% of the links coming in have exact match text, 33% have some broad match related terms, and 33% have the website name or “click here”. Unfortunately, no one in the industry has successfully performed a broad enough analysis to define what those percentages should be ideally.

However, for internal links, it is reasonable to assume that the search engines don’t expect varying anchor text internally; in fact, they probably expect you to consistently refer to a page in a consistent way internally. So it is probably OK to use the targeted keyword as your anchor text, and leave it at that. Anyone with any experience with respect to this, please post a comment below.

In summary, I wouldn’t obsess about “sculpting” anchor text internally per se – just make sure you have references elsewhere on your website for every page, ideally multiple times, with the anchor text you are targeting.
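A quick audit along these lines can be sketched as follows. The data structures are hypothetical (a list of internal links and a map of each page to its target keyword), and the check is a plain case-insensitive comparison of the anchor text against the keyword.

```python
from collections import defaultdict

def anchor_audit(internal_links, targets):
    """Count internal links whose anchor text equals each page's keyword.

    `internal_links` is a list of (source, target, anchor_text) tuples.
    `targets` maps each page URL to the keyword it is meant to rank for.
    """
    matching = defaultdict(int)
    for _source, target, anchor in internal_links:
        keyword = targets.get(target)
        if keyword and anchor.strip().lower() == keyword.lower():
            matching[target] += 1
    return {url: matching[url] for url in targets}

# Made-up site data for the example.
targets = {
    "/blue-tennis-shoes.htm": "Blue Tennis Shoes",
    "/red-tennis-shoes.htm": "Red Tennis Shoes",
}
internal_links = [
    ("/shoes/", "/blue-tennis-shoes.htm", "Blue Tennis Shoes"),
    ("/red-tennis-shoes.htm", "/blue-tennis-shoes.htm", "blue tennis shoes"),
    ("/shoes/", "/red-tennis-shoes.htm", "click here"),
]

audit = anchor_audit(internal_links, targets)
needs_links = [url for url, count in audit.items() if count == 0]
print(needs_links)
```

Pages that land in `needs_links` have no internal link using their targeted anchor text, so those are the ones to fix first.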

Most people in fact simply refer to this principle as “internal linking”, and the main idea is: don’t forget to do it!

Let Your Content Plan Dictate The Scope of Your Architecture Optimization

When designing the architecture of a website, you should ask yourself “how much content will this website eventually contain?”. If it will only be 25 pages, that’s pretty easy to lay out.

If it will be 200 pages, you might consider a two-level hierarchy (maybe even 2-level drill-down JavaScript menus for a good user experience).

If it will be many thousands of pages, you may need a three or four-level hierarchy, but you might consider rewriting your URLs to appear to be two levels deep, and you might consider doing some sophisticated organizing of the content and “nofollowing” links to various sections.
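As a back-of-the-envelope check on these page counts, here is a sketch that estimates how many levels below the home page a site needs. It assumes, unrealistically, that every page links down to the same number of child pages; `links_per_page` is a hypothetical parameter you would set from your own navigation design.

```python
def levels_needed(total_pages, links_per_page):
    """Smallest number of levels below the home page that can hold every page."""
    if total_pages < 0 or links_per_page < 1:
        raise ValueError("total_pages must be >= 0 and links_per_page >= 1")
    levels = 0
    reachable = 0
    width = 1
    while reachable < total_pages:
        width *= links_per_page  # pages that fit on the next level down
        reachable += width
        levels += 1
    return levels

for pages, per_page in [(25, 25), (200, 20), (5000, 20)]:
    print(pages, "pages,", per_page, "links per page ->",
          levels_needed(pages, per_page), "level(s)")
```

With 20 links per page, 200 pages fit in two levels and 5,000 pages need three, which lines up with the rough guidance above.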

If you’re a business and you have initial “evergreen” content that you’re going to craft, and then secondary content you want to pump out later, you might consider building in a few generic places on your website where you can place secondary content on an ongoing basis without having to make architecture changes later; a blog section, and a white paper and app notes (or “Resources”) section are great catch-alls for this purpose.

Just remember that in the case of a blog, the latest posting will typically have the most PageRank, then it will roll off of the first page and onto earlier pages, and will lose PageRank over time; if a particular posting is important you may need to “route” PageRank to the archive page it lives on.

The bottom line is, if you are going to have a large website, you would do well to spend a lot of time on your website architecture, and using principles such as Siloing and PageRank Sculpting may be worthwhile. If you are going to have a smaller website, and you don’t have much PageRank to start with, your time might be better spent worrying about how you will do outreach to obtain external links to individual pages of your website, rather than how to optimally route around PageRank that isn’t really there anyway.

Conclusion

There is a lot involved in these five SEO principles for website architecture, and I don’t believe it’s possible to reduce them down to a repeatable checklist – every website has different constraints, goals, CMS limitations, and so on. The important thing is to have an understanding of these principles, to be mindful of them when laying out a website architecture, and when you have a candidate design, ask yourself:

1.) Have I mapped individual keywords to individual content pages?

2.) Have I siloed my content properly?

3.) Is the website hierarchy too deep or just right?

4.) Have I capitalized on any PageRank Sculpting opportunities?

5.) Do I have sufficient internal linking that utilizes the anchor text I want to target for each page?

This is awesome! Right on Ted

Okay – I just have to comment on the PR Siloing and NoFollow.

I agree 100% with the “don’t post videos” view … but, G stated years ago that they stopped counting NoFollowed links when calculating PR. That action nullified PR Siloing efforts using the nofollow attribute.

Are you suggesting that they have reversed that process/treatment?

Google’s statements on this have been conflicting, numerous, and as always, highly qualified.

As a result, I think it’s difficult to ascertain what actions, if any, they ever actually took to change how “nofollow” is handled, and when.

I’m suggesting that using “nofollow”, currently, for PageRank sculpting purposes, works, regardless of what Google has said in the past. My few anecdotal experiences and SEOMoz’s correlation data are enough to convince me.

If anyone has any evidence based on a study, or “clinical evidence” from an actual website that added or subtracted nofollows and saw changes as a result, I’d love to hear it!

As usual, Ted….top notch advice herein….love it and will refer a short list of clients to this post! #Kudos

🙂

Jim

It’s my understanding that to counter page sculpting Google made it clear at SMX 2009 that adding the nofollow attribute to internal links causes the page rank that would have been passed to just evaporate. I tend to lumber terms and privacy etc on one page and use an anchor tag on each of their separate links to direct the viewer to the right section. Multiple links to the same page are seen as one link to Google so I’m not wasting PR on many low SEO value pages. Would like to see a study on internal no follow, until then I’ll play it safe!

I hear you Mike, that’s my understanding as well…however, my experience, and SEOMoz’s correlation measurements, have made me a non-believer in on-site PageRank “evaporation”, regardless of previous Google statements to the contrary.

Interesting idea using multiple links with anchors, I like that.

No shame in playing it safe!

Very interesting article, I’ve been in business for 20 years providing carpet cleaning, janitorial, water and fire damage restoration. Recently a friend mentioned the importance of optimizing my website if I wanted to increase my visibility and traffic and recommended your site as a way of becoming educated on what’s involved. Even though there were parts that I had to read twice to fully understand you do a great job of explaining things in laymans terms. I will recommend this site to others in my line of business.

I agreed with your points.

The url structure and contents should be good and Yes the Design must look great so that people would like to stay on your site

Thanks for the great series of ” complete guides”! An excellent resource to reference SEO work including some great tips! Good luck in 2013.

Well written article. I’m new to the SEO and web optimization game. What you state sounds good but actually implementing it doesn’t. Looks like I need to read this article several times to fully understand.

Thanks again for the info.

Josh

Hello,

I have recently attended Bruce Clay’s SEO training and he goes in depth into the Silo theory.

This post certainly complements what I have learned from Bruce Clay.

Thank you for this post.

Leo

These are also known as “sponsored results” or “sponsored links” and are generally purchased from Google (the Google AdWords product), or Yahoo. Finally, you should keep a close eye on traffic that is coming into your site each week. Internet marketing includes various processes which help to engage potential customers such as search engine marketing, search engine optimization, banner ads, email marketing and other strategies.

Okay – I just have to comment on the PR Siloing and NoFollow.

I agree 100% with the “don’t post videos” view … but, G stated years ago that they stopped counting NoFollowed links when calculating PR. That action nullified PR Siloing efforts using the nofollow attribute.

Are you suggesting that they have reversed that process/treatment?

Yes, Matt Cutts announced they ignore NoFollow, back around 2009 or so – once. But subsequently SEOMoz (now Moz)’s annual study, I believe it was in 2010 or 2011, found ZERO difference between followed and nofollowed links from a ranking correlation perspective (it was buried in their charts and few noted or commented on this). Either way, you can always silo/route PageRank by deciding whether or not a link is to exist or not…so at least to that extent PR sculpting is certainly still a thing, regardless of which way you believe in nofollow