20

Feb 12

Google Crawling Behavior Exposed: 16 Different Googlebots?

Of the major disciplines in SEO, architecture is perhaps the most important of all. This is because if you don’t take into account Google crawling behavior and Google can’t properly spider your website and discover your content, then it won’t matter if it’s the right content, whether it’s optimized, or whether you have obtained any links to it. It may as well not exist if Google can’t discover it.

You’re probably familiar with Google’s spider program, Googlebot, but there are many other ways that Google visits your website. This post attempts to pull them all together and raises some open questions about Google crawling behavior for the community, because some aspects of Google’s numerous spiders are still relatively mysterious.

Google Crawling Behavior Problem: Whitelisting User-Agents

A client of mine had some issues recently with Google Instant Preview; they had been whitelisting browsers based on user-agent detection, and I was surprised to see that at some point, Google’s bot that grabs the preview of their web pages must have triggered the whitelist.

Their SERP preview images clearly showed a message in the image “Browser not supported”; this was the message displayed by their website, which had to have been triggered by Google’s bot. Even worse, Google Instant Preview was highlighting this large message as the key text on the page. This lends some credence, in my opinion, to Joshua Giardino’s theory that Google is using Chrome as a rendering front-end to their spiders – and it could simply be that for a short time, the Instant Preview bot’s user-agent was reporting something that was not on my client’s whitelist.

What is This Google Instant Preview Crawler Anyway?

Digging into this, I found that Google Instant Preview (although according to Google it is essentially Googlebot) reports a different user-agent, and in addition to running automatically, it appears to update (or schedule an update perhaps) on demand when users request previews. I knew Google had a separate crawler for Google News and a few other things, but had never really thought much about Google crawling behavior as it relates to Instant Preview. To me, if something is reporting a separate user-agent and is behaving differently, then it merits classification as a separate spider. In fact, Google could easily just have one massive program with numerous if-then statements at the start and technically call it one crawler, but the essential functions would still be somewhat different.

This got me wondering…just how many ways does Google crawl your website?

“How do I crawl thee? Let me count the ways…”

It turns out – quite a few. Google lists around 8 or 9 in a few places itself, but I was able to identify *16* different ways Google could potentially grab content from your website, with a variety of different user agents and behaviors.

Arguably the single page fetch ones are technically not complete “crawlers” or “spiders” but they do register in your web server log with a user-agent or referrer string, they pull data from your website, and in my opinion can be considered at least partially human-powered crawlers, since humans are initiating the fetch on demand.

Take “Fetch as Googlebot” for instance – even though it returns the same user-agent as Googlebot, it appears to be a different program, by Google’s own admission:

“While the tool reflects what Googlebot sees, there may be some differences. For example, Fetch as Googlebot does not follow redirects”

One could argue that Google Translate is more of a proxy than a spider, but Google is fetching your web page – who knows what it is doing with it? Google clearly states that the translated content will not be indexed, but that doesn’t mean the retrieved web page isn’t perhaps utilized in some way – maybe even indexed again in Google’s main index, faster than it would have been.
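Since each of these fetchers leaves a user-agent string in the web server log, one rough way to see which of them visit your own site is to tally log lines by user-agent. The sketch below assumes an Apache-style "combined" log format and uses a hand-picked list of substrings (`GOOGLE_TOKENS`) that is only an assumption, not an official or complete list; services that identify themselves only through the referrer string are not covered.

```python
import re
from collections import Counter

# Assumed substrings for Google fetchers; check them against your own logs.
# More specific tokens come first so "Googlebot-Image" is not counted as "Googlebot".
GOOGLE_TOKENS = [
    "Googlebot-Image",
    "Googlebot-News",
    "Googlebot-Video",
    "Googlebot-Mobile",
    "Google Web Preview",
    "Mediapartners-Google",
    "AdsBot-Google",
    "Googlebot",
]

# In the combined log format, the last two quoted fields are referrer and user-agent.
LOG_TAIL = re.compile(r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"\s*$')


def classify(line):
    """Return the first matching Google token for a log line, or None."""
    match = LOG_TAIL.search(line)
    if match is None:
        return None
    agent = match.group("agent")
    for token in GOOGLE_TOKENS:
        if token in agent:
            return token
    return None


def tally(lines):
    """Count log lines per Google fetcher, ignoring everything else."""
    counts = Counter()
    for line in lines:
        label = classify(line)
        if label is not None:
            counts[label] += 1
    return counts


if __name__ == "__main__":
    import sys

    # Usage: python tally_google.py < access.log
    for label, count in tally(sys.stdin).most_common():
        print(f"{count:8d}  {label}")
```

Anything claiming to be Googlebot in the user-agent can be spoofed, so treat the counts as a starting point for investigation rather than proof of who fetched the page.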

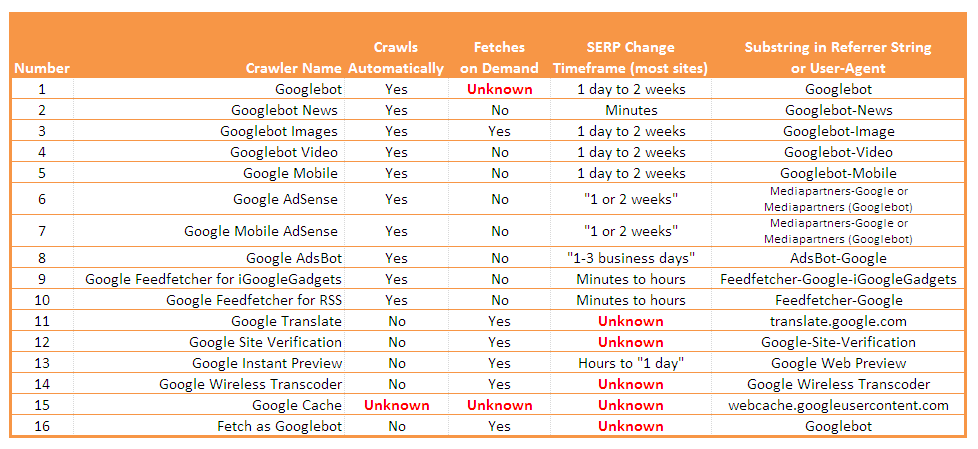

Table 1 details what I found. For “SERP Change Timeframe”, items in quotes are from various Google statements and others are rough estimates from my own experience:

The links referencing background information on each of these are below in Table 2, in case you’re interested in drilling into any particular area of Google crawling behavior. It’s important to note that Video, Mobile, News, and Images all have their own sitemap formats. If you have significant amounts of content in these areas (e.g. on the order of thousands of images or hundreds of videos), it’s advisable to consider creating separate sitemaps to disclose that content to Google’s crawlers in the most efficient way possible, rather than relying on having them discover content on their own.
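As an illustration of what a separate sitemap can look like, here is a minimal sketch that builds an image sitemap from a mapping of page URLs to image URLs. The function name `build_image_sitemap` and the example URLs are hypothetical; the namespaces are the ones used by the sitemaps.org protocol and Google's image sitemap extension as far as I know, so check them against the current documentation before relying on this.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"


def build_image_sitemap(pages):
    """Build an image sitemap string from {page_url: [image_url, ...]}."""
    # Register prefixes so the output uses a default namespace plus "image:".
    ET.register_namespace("", SITEMAP_NS)
    ET.register_namespace("image", IMAGE_NS)

    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page_url, image_urls in pages.items():
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page_url
        for image_url in image_urls:
            image = ET.SubElement(url, f"{{{IMAGE_NS}}}image")
            ET.SubElement(image, f"{{{IMAGE_NS}}}loc").text = image_url

    # Returns the XML body only; add an XML declaration when writing to a file.
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical example data.
    print(build_image_sitemap({
        "http://example.com/gallery": [
            "http://example.com/img/one.png",
            "http://example.com/img/two.png",
        ],
    }))
```

The video, news, and mobile formats follow the same pattern with their own namespaces and required fields, which are not shown here.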

Table 2 – Background Information

Some Interesting Learnings I Took Away From This Research

1.) You Can Easily Provide Your Website in Other Languages

Totally incidental to this posting but *really* neat – in researching the Google Translate behavior, I discovered that Google Translate can provide you widget code to put on your blog – look at the rightmost part of the TOP-NAV menu – Coconut Headphones is now available in other languages! You can get the code snippet for this here if you’d like to do the same:

http://translate.google.com/translate_tools

2.) Whitelisting User-Agents is Probably a *Bad* Idea

One takeaway is that, if you think about it, whitelisting is a *very* bad idea, although it’s easy to see why people are often tempted to do so. One might use whitelists with the intent of excluding old versions of IE, or perhaps a few difficult mobile clients. One could also easily envision large company QA groups arbitrarily imposing whitelists based on their testing schedule (i.e. for CYA purposes – since it’s impossible to truly test all browsers).

But I think it’s *far* better to blacklist user-agents than to whitelist them, simply because it’s impossible to predict what Google will use for user-agent strings in the future. Google could change its user-agent strings or crawling behavior at any time, or come out with a new type of crawler, and you wouldn’t want to be left behind when that happens.

It’s important to “future-proof” your website against Google changes – algorithmic and otherwise, so I think on the whole blacklisting is a wiser approach.
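To make the difference concrete, here is a minimal sketch of the two approaches side by side. Both token lists and the `ExampleNewCrawler/1.0` user-agent are hypothetical, chosen only for illustration; a real site would pick its own lists.

```python
# Hypothetical list of clients the site has decided not to support.
BLOCKED_AGENT_TOKENS = ("MSIE 5.", "MSIE 6.")

# Hypothetical whitelist of the browsers a QA team happened to test.
ALLOWED_AGENT_TOKENS = ("Firefox/", "Chrome/", "MSIE 8.", "MSIE 9.")


def is_supported(user_agent):
    """Blacklist check: allow every client unless it matches a blocked token."""
    agent = user_agent or ""
    return not any(token in agent for token in BLOCKED_AGENT_TOKENS)


def is_whitelisted(user_agent):
    """Whitelist check: reject every client that does not match a known token."""
    agent = user_agent or ""
    return any(token in agent for token in ALLOWED_AGENT_TOKENS)


if __name__ == "__main__":
    # A crawler nobody anticipated when the lists were written.
    new_crawler = "ExampleNewCrawler/1.0"
    print("blacklist lets it in:", is_supported(new_crawler))
    print("whitelist lets it in:", is_whitelisted(new_crawler))
```

The unknown crawler passes the blacklist check and fails the whitelist check, which is the "Browser not supported" situation described earlier: a whitelist fails closed for anything it has never seen, including a new Google user-agent.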

Some Interesting Questions Still Unanswered

1.) Is it possible to “game” Google using this information?

…by using the on-demand services to get indexed more quickly? I have seen *many* SEO bloggers talking about how Fetch as Googlebot will speed your indexing, but I have found no evidence of anyone who actually performed an A/B test on it with two new sites or pages. What about Instant Preview, Google Cache, and Submit a URL? Does submitting a single URL to Google result in any speedier indexing, or is it only helpful for alerting Google to new websites?

Note: A few days after this article was originally published, Matt Cutts announced new features in “Fetch As Googlebot”; you’re now allowed to instruct Googlebot to more quickly index up to 50 individual pages a week, and 10 pages & all the pages they point to per month. So it appears that rather than having people figure out how to “game” the system, Google has given us a direct line into the indexing functionality. Whether any of the other methods listed above have any effect still remains to be seen.

See video here:

http://www.searchenginejournal.com/googles-matt-cutts-explains-fetch-as-googlebot-in-submit-to-index-via-google-webmaster-tools/40452/

2.) How are cached pages retrieved?

…is it similar to Instant Image Previews, where in some cases it’s automatically retrieved based on a crawl, but sometimes it’s initiated on demand when users click on a Cache entry? Is it just Googlebot itself or something else? There is little to nothing on the web or on Google’s support pages about this, other than people noting or complaining about the listed referrer string in their web server logs, and Google doesn’t seem to acknowledge much about this at all, from what I could find.

You’d think that Google’s function of essentially copying the entire web (1 trillion+ documents) and storing them for display from their own website would be a little better documented, or at least, would have been investigated by the SEO community! (I’m hoping I’ve missed something on this one – straighten me out on this point if you know anything, by all means).

Conclusion

Clearly Google moves, sometimes, in mysterious ways. If you run across any additional ways that Google examines your website, let me know and I’ll update the tables above. Also – if you know of any quality research, or even if you’ve just done some anecdotal tests of your own on any of the issues above regarding Google crawling behavior, by all means – let us know your thoughts below!

Good stuff, Ted. Good timing, too. We recently debugged a weird issue with Adwords instant previews for our client National Geographic and found the culprit was “Google Web Preview.” More here: http://www.rimmkaufman.com/blog/google-preview-user-agent-redirection-curl-command-user-agent/09022012/

Until now, we are the only ones who’ve done any research here. Thank you for adding even more insight here.

Whitelisting is a *GREAT* idea, far from bad. Been doing it since 2006 with no backlash whatsoever. You just need to monitor activity and add to your whitelist now and then which is no different than monitoring activity to add to your blacklist. Blacklists are the bad idea, you can never protect your content or server resources if you wait until after the damage has happened, it’s REACTIVE opposed to whitelisting which is PROACTIVE and stops the problems before they start.

Ah, you’re the CrawlWall guy, I ran across your website recently.

I wonder whether it’s wise though, since Google clearly tries to figure out if you’re presenting different content to users versus different content to Googlebot; I would think it must have a special version of Googlebot that quietly goes in and pretends to be a normal user, to double-check things.

Whitelisting could perhaps risk blocking that (presumably with some sort of penalty resulting). Then again, the Fantomaster guy has been around for a long time, maybe there is something to it…

Feel free to drop a commercial-sounding pitch in a comment here, because I for one certainly don’t “get it” and could probably use some “edumication” on this front.

You could be right, I never claimed to know everything Google does, but I’ve never seen anyone penalized for whitelisting *yet*.

Maybe when 10s of thousands of sites big and small are all whitelisting we’ll find out for sure when push comes to shove what Google truly does because it’ll become more obvious.

However, the way I whitelist is I just whitelist and validate the things claiming to be spiders. Other things coming from Google, and there are proxy services involved, are treated on a case by case basis, browser or crawler, so I’m not treating everything exactly the same which could mean avoiding a penalty by blocking the wrong thing.

Besides, wouldn’t you think if Google were truly checking for bad behavior that they wouldn’t use actual Google IPs would they? I would think Google would check from outside their official network just so it wouldn’t be so obvious.

This is a brilliant post! Very insightful and to be honest if I were Google I would use Chrome as a renderer, it makes complete sense specially if you take their latest “layout” signal.

Thanks Ted, this is a fantastic post. Google often experiments and mixes things up, especially for mobile devices, etc.

You have to think carefully about how you treat user agents and the best word to keep in mind is “test!”

Recently, we have experienced some pretty scary crawl errors on our webmaster tools reports. The crawl errors have been fluctuating from 10,000 to 129,000. We feel helpless because most of the errors are attributable to pages and even directories that Google’s bot recognizes even though they do not exist on our server. We contacted our hosting company thinking that we had been hacked, but they claim otherwise. It’s like some alien spacecraft has been generating pages and directories for our website that only Google’s crawler can see and because these pages do not exist, a crawl error is generated.

Another crawl error we have been having is with a page that we removed from our website. It was a dynamic page that accepted keyword queries. The removed page still receives thousands of hits from “scrapers” with keywords in Japanese and Swedish languages. When a hit is made to a removed page we get a crawl error.

These “scrapers” cloak their IP addresses and our webmaster tools report the sources of these hits as “unavailable,” so we cannot identify and control those hits by any way available to us.

In both of these cases, we are helpless to correct these crawl errors, yet Google may be penalizing us for them. We wonder if anyone else has been experiencing similar situations.

Thanks much.

Mike