18

Jul 11

Google’s Secret Ranking Algorithm Exposed

Last year, some people from the academic community who hadn’t been snatched up yet by Google or Bing did a really interesting study. Rather than simply researching correlations between individual factors and rankings, as SEOMoz does a great job of doing every so often, they used machine learning techniques to build their own search engine and trained it to reproduce results similar to Google’s. After training, they extracted the ranking factors from their trained engine, published them, and presented them at an industry conference. For the queries they trained on, they were able to correctly predict 8 of the top 10 Google results roughly 80% of the time. Not bad, considering Google’s algorithms use “over 200 variables” and the study only examined 17 of them; obviously they chose wisely. I’ve mentioned this in a previous posting, but I think a really thorough run-through of the study would be informative and interesting.

How the Study was Done

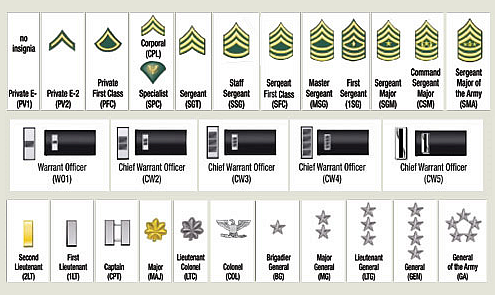

In their paper “How to Improve Your Google Ranking: Myths and Reality”, they detail the actual weightings for the various ranking factors. You could essentially take the values in Figure 4B on page 7 (look at the graph on the upper right, find the line with the x’s, which is the third iteration they converged on, and read the values off the left axis) and construct a regression equation with the weights, i.e.

Rank score = .95 x PageRank + .80 x (# of keyword occurrences in hostname) + .58 x (# of keyword occurrences in meta-description tag) + …

If you were to pull all of the factors from the top 200 SERP results for a particular keyword, apply them to this equation to come up with a score for each result, and then sort the results by that score, you’d have a shot at reproducing the correct order for the top 10 SERPs. Doing so would of course be a significant effort, and I am unaware of anyone publishing anything duplicating their results.
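As a rough sketch of the scoring-and-sorting step just described, the snippet below applies the three weights quoted in the equation above to a handful of made-up results. The feature names, URLs, and feature values are hypothetical placeholders, and the remaining weights from the paper are left out rather than guessed.

```python
# Only the three weights quoted in the equation above are used here.
# Feature names are hypothetical labels, not identifiers from the paper.
WEIGHTS = {
    "pagerank": 0.95,
    "kw_in_hostname": 0.80,
    "kw_in_meta_description": 0.58,
}


def rank_score(features, weights=WEIGHTS):
    """Weighted sum of a page's factor values; missing factors count as 0."""
    return sum(w * features.get(name, 0) for name, w in weights.items())


def rerank(results, weights=WEIGHTS):
    """Return the results sorted by descending rank score."""
    return sorted(
        results,
        key=lambda r: rank_score(r["features"], weights),
        reverse=True,
    )


# Made-up example data: three pages with invented factor values.
results = [
    {"url": "a.example", "features": {"pagerank": 3, "kw_in_hostname": 0, "kw_in_meta_description": 1}},
    {"url": "b.example", "features": {"pagerank": 2, "kw_in_hostname": 1, "kw_in_meta_description": 1}},
    {"url": "c.example", "features": {"pagerank": 4, "kw_in_hostname": 0, "kw_in_meta_description": 0}},
]

for r in rerank(results):
    print(r["url"], round(rank_score(r["features"]), 2))
```

In practice the hard part is collecting the factor values for all 200 results, not the arithmetic; the sort itself is a one-liner.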

This was a revolutionary study, because you can look at the SEOMoz calculated correlation variables all you want, but you can’t really construct a valid regression equation from them, as correlations don’t exactly add (there’s cross-correlation between them, and probably a myriad of other statistical issues with doing so).

Was or is the Study Valid?

There are arguments that this study is inconclusive or only partially useful, since only a particular set of keywords was studied, the study was done pre-Caffeine, and the only off-page factor studied was PageRank. Yes, SEOMoz recently found that the factor most highly correlated with ranking was Facebook likes, and certainly things have changed since Caffeine. However, the study might also help you to understand how to price your SEO services. Plus, think about all of this from Google’s perspective. How much can they really upset the entire apple cart by changing everything? The web has changed in the last couple of years, but I would argue not a lot. Even if there have been major changes to Google’s algorithms, and even though there are certainly many unaccounted-for variables, I am of the opinion that things cannot have changed that much.

Either way, examining the results of this study is very instructive and an interesting thought exercise for understanding how and why SEO works the way it does.

The Ranking Factors the Study Confirmed

Below I’ve reproduced each ranking factor listed in the paper, and have eyeballed the values off of the graph for the weightings. What’s interesting is not the exact values, but the ordering and also the very nature of the factors they analyzed:

Bounded vs. Unbounded, Linear vs. Logarithmic

Almost all of these variables have bounds to them. For instance, you can only put the keyword in a title so many times before you “trip a search spam filter”. The age of a domain is ultimately bounded by when the domain name system started, and so on. There is one variable that is not bounded: PageRank. It is interesting to note, however, that this one is logarithmic; each level requires, on average, 5 times as many links to reach (for more on this, see a previous article I wrote for SearchEngineLand on that topic).

So, you can get all the PageRank you want, but it’s going to get harder and harder the more you do it, relative to the other variables. This explains why some of the cheapest things you can do (i.e. highest ROI) are to fix your title, meta-description, H1, and so on, and then get a few links to get the page’s PageRank up to a PR2 or PR3 level.
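To make the logarithmic cost concrete, here is a small sketch of the "5 times as many links per level" rule of thumb. The starting figure of 10 links for PR1 is a purely hypothetical assumption chosen for illustration; only the factor of 5 comes from the text above.

```python
LINKS_PER_LEVEL_FACTOR = 5   # "5 times as many links" per level, from the article
LINKS_FOR_PR1 = 10           # hypothetical baseline, not a measured value


def links_needed(level, base_links=LINKS_FOR_PR1, factor=LINKS_PER_LEVEL_FACTOR):
    """Approximate links needed to reach a given PageRank level (level >= 1)."""
    return base_links * factor ** (level - 1)


for level in range(1, 7):
    print(f"PR{level}: ~{links_needed(level)} links")
```

Under these assumptions, going from PR2 to PR3 costs 200 more links, while going from PR5 to PR6 costs 25,000 more, which is why the first few levels are the cheap ones.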

Surprisingly, this study found value in having outbound links on the page with anchor text that includes the keyword. I’ve marked this as “bounded” because again, if you have too many outbound links with targeted anchor text, you’re likely to be identified as search spam.

Incoming Anchor Text

The biggest missed opportunity in this study was not looking at keywords in inbound anchor text. I am postulating in the table that this is unbounded and linear. I think many of us have seen examples of situations in the SERPs where a PageRank 5 page is being outranked by a PageRank 2 page, the difference being something like 800 incoming links with targeted anchor text. My belief is that the weighting of this variable is very low (on the order of .05 to .1), but linear. This would explain why anchor text is the be-all and end-all of SEO: it may have a low weighting, but more just plain helps, and your ability to get it is virtually unlimited. You also get sort of a double value, in that the anchor text is probably one factor in ranking, and the link itself slightly increases your PageRank factor.

It’s important to note that SEOMoz’s correlation research shows a fairly low correlation of ranking to incoming links with exact anchor text. But if this corresponds to a weighting that is linear and unbounded, then even a weak correlation, when multiplied by a large enough number of links, could make a huge difference in ranking. I am of the opinion that a lot more research into this is warranted.
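The arithmetic behind that hunch can be sketched as follows. This assumes the speculative linear, unbounded anchor-text term with a weight of .05 (the low end of my guess above), and it plugs raw PageRank levels and raw link counts straight into the score. The study did not measure inbound anchor text at all, so treat this purely as an illustration of the hypothesis, not a result.

```python
PAGERANK_WEIGHT = 0.95   # weight quoted from the study's equation
ANCHOR_WEIGHT = 0.05     # speculative: low end of the guessed .05 to .1 range


def score(pagerank, anchor_links):
    """Two-factor score: PageRank plus a hypothetical linear anchor-text term."""
    return PAGERANK_WEIGHT * pagerank + ANCHOR_WEIGHT * anchor_links


def break_even_links(pr_high, pr_low):
    """Anchor-text links the lower-PR page needs to match the higher-PR page."""
    return PAGERANK_WEIGHT * (pr_high - pr_low) / ANCHOR_WEIGHT


print("PR5, no anchor links:  ", score(5, 0))
print("PR2, 800 anchor links: ", score(2, 800))
print("Break-even link count: ", break_even_links(5, 2))
```

Under these assumptions a PR2 page would need only a few dozen targeted links to catch a PR5 page with none, so 800 would swamp the PageRank difference entirely.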

Other unbounded variables that may be useful to Google for ranking purposes include of course, Tweets, Facebook Likes, and (when enough data accumulates but probably not yet) – Google +1’s.

Other Takeaways

Other interesting takeaways from the study – there is such a thing as over-optimization (i.e. keywords in H4 and H5 tags can actually hurt you slightly), keyword density matters (so get on the cluetrain, anti-keyword-density people!), and keyword-rich domain names are extremely important.

Conclusion

The study of course isn’t valid for specialized portions of Google’s search algorithms, such as the order in which YouTube videos are sorted or the Local Search component of Universal Search; many of these use other factors (of the 200+).

However, the study illustrates a few things about SEO overall. It makes the most sense, from the perspective of the weightings available for each factor, to take care of your easy on-page issues first, then work on building up links (actually, it makes the most sense of all to buy an exact-match domain name first!). This explains why most people in this field typically take care of issues in that order, and explains the natural logical flow of SEO efforts: start with getting architecture right, then optimize your content, and finally focus on linking. Essentially SEO, like so many other fields, is all about identifying the work with the highest ROI-to-effort ratio and focusing on that first.

This is an amazing write up. I haven’t checked out the academic paper, but look forward to it.

I’m particularly surprised about what the study says about both domain and page age.

I would have thought domain age was a bigger factor…and for page age to be a negative factor is surprising as well.

@Ted…while I’m still working my way thru the paper, this blog piece is very well done!

As an SEO practitioner I was also more than surprised, that this blog piece plus the paper itself has (as yet anyways) NOT been widely promoted by others…seems like coconutheadphones is a spot that not many come to…cept those of us who really “hunt” for great SEO blogs.

Hat’s off, Ted….great catch here!

🙂

Jim

Great article!

Any chance we’ll get to read your posts on google+ soon?

looks really interesting.weight of some ranking factors seem to be a bit strange, but i will check out at my own pages.

Thanks for sharing this.

It’s really nice information for SEO peoples.

@Lumin – Page age as a negative factor can be balanced out by external link freshness.

Hi and thanks for this really amazing post and paper.

I do appreciate the time and effort you have made with the experiment but have to point out a MAJOR flaw in your experiment.

The keyword sets you have chosen fall into four categories: Linux commands, chemical elements, and music and astronomy terms. These are all what we as SEOs call “non-commercial” or low-competition words. In other words, people don’t do SEO for them b/c they usually don’t have the potential to make big money the way financial, health or insurance keywords do, for example.

That being said in this case leaving out the whole “link graph” including anchor text distribution didn’t affect the results probably as it would have in other keyword group categories.

The wrong assumption is also that Google uses “one” weighting for variables – this is the major flaw in SEOMoz’s “ranking factors”, as it is with this paper. The truth is that the ranking factor weighting works differently depending on the theme… heavily linked/SEOed industries work differently than low-competition areas, where the on-page factors as listed might make the major difference.

I do however believe this is awesome material to start from and I would love to see this repeated for keywords like “credit cards”, “home insurance” or “digital camera” to name a few high potential keywords.

Best regards

Christoph C. Cemper

CEMPER.COM

Great article Ted and I appreciate the additional insights provided by Christoph.

really good article. That the anchor text is not so important is new to me and I would not expect. I made the experience, that Keyword rich domains are extremely useful, especially if they have no dashes in it.

Correction – the “ANCH” factor (anchor text of links pointing externally) as originally published here had a typo weight of “.95”, it should read “.05”. I’ve corrected it.

Thanks for the great review of the paper. These factors don’t really surprise me, as all this stuff is already mentioned by Google themselves in their SEO-Guidelines (except from facebook likes and twitter) but I am happy to see this confirmed by a third party and that i have done quite a good work with my websites so far.

Thanks for this article.

Greetings

Very interesting article. You know, even if the findings aren’t 100 percent accurate, at least it gives you a fairly good idea of what to concentrate on in SEO.

The timing of this article is amazing, I own a small bookkeeping firm Able Bookkeeping and have been trying for some time to optimize my website http://www.ablebk.com for 1st or 2nd position on Google for the keyword phrase bookkeeping Rogers. Through all of my reading I had come to the conclusion that I would be better off starting over with a new url http://www.bookkeepingrogers.com and putting all of my efforts into that url. I published this new url this morning and hope to have a better ROI like you described in your article. I wish I had read this article 6 months ago. (Great Article)

Great Piece Ted. Working through the white paper now. Fantastic blog all around. Thank you !

Thank you so much for this easy to understand, SEO best practice piece and the link to the paper of which you have adapted. Great blog

Thanks a lot. Just was looking onto the backlinks of a client with Google Ranking <30. Although the client has more and better backlinks he is outranked by a competitor with poor backlinking (top 10), but main keyword combination in the domain name.

Ted, thank you for sharing this great information with us. I think as you have mentioned, Search engines change their algos all the time to confuse se optimizatiors, but if you follow the simple well established, white hat seo methods, you will be fine. Simple optimization, content, links!

Hi, Ted

Thanks for sharing this interesting and thorough study. keep writing good thoughts.

It appears a good study on the surface of it, but the results do raise a few eyebrows. Aside from the keyword density factor (I’m sceptical as I’m a kw-density-naysayer) it lists meta description as a rather significant ranking factor. This is, of course, entirely false. Also the great emphasis placed on PageRank seems to collide with what we know about how (TB)PageRank actually works in Google’s rankings…

Barry, I think you’re right that meta-description is not a direct ranking factor, but I believe that it’s correlated to your CTR relative to your competition, in that a good meta-description increases your CTR. Some (myself included) believe Google is using relative CTR as a ranking factor:

https://coconutheadphones.com/does-google-use-click-through-rate-as-an-organic-ranking-factor-answer-maybe/

Ted, hmmm, yeah, you could be right on that. Meta description – when Google bothers to use the one you’ve supplied instead of something else it thinks is more relevant – is definitely a CTR improvement opportunity, and if indeed the big G uses CTR as a factor (which it does in personalised search – I’m not so sure in unpersonalised results [but then, we see less & less of those]) then yes I suppose you could see meta description as an indirect ranking factor. Still, .50 seems a heavy value to me…. but then that might be consistent with the pervasive personalisation of SERPs.

Henry, I keep seeing comments like yours here, and I’m having a hard time understanding them – how can people state that PageRank, or keyword density (a previous commenter), or (pick your favorite factor) are not ranking factors as such?

No one can, unless they’re either from Google and wrote the algorithm and know, or unless they run a massive correlation study and come up with some kind of evidence one way or the other. The study referenced above is very strong evidence..

True, correlation does not imply causality so we should be wary, but what better evidence can we have than someone reverse engineering SERPs, training a machine learning program using variables pulled from the pages, checking it against test data, then pulling the variables weights out of their machine learning system? The evidence is in the numbers – the study is really strong evidence that PR matters, as is SEOMoz’s yearly correlation studies.

Not trying to slam you in particular Henry, I just keep seeing these types of comments and I don’t understand why people have such a hard time believing numbers out of a pretty scientific study. Is it because of what they’re hearing around the industry? Caveat Emptor in that case. Google even says they still use PageRank, right on their website under “Technology Overview”.

Reminds me of this story!

http://www.biblegateway.com/passage/?search=Luke+16%3A19-31&version=NKJV

A little back and forth with Bill Slawski on this:

http://www.seobythesea.com/2012/01/heading-elements-and-the-folly-of-seo-expert-ranking-lists/#comment-420898

Very interesting perspective – particularly on the usage of anchor text. Obviously low hanging fruit of on site optimization needs to be handled first. Good stuff!

This is a follow up to a post I made in Oct, 2011. The new site I published with the keyword in the url has in fact done very well with only 2 backlinks. I just published another site http://www.eureka–springs.com to not only promote but also check the validity of keyword in url, title, description, and h1. I’ll check back in a couple of months and let you know. Thanks again for this site it is a great resource.

Great post, nice little experiment.

But Christoph is right, the keyword are non-commercial and low competitive. I think you should repeat this experiment for keywords like “buy viagra” or “payday loans”. You’ll get very different results.

The title for this post completely sucked me in when I came across this!!! I thought I’d found something new…..several years late to the party.

These ranking methods are very outdated now.

Very interesting and I completely roger with your PageRank stuff. Thanks for sharing, it make me clear about my thoughts for whole ranking strategy.

Really great article on how Google looks at your site. There are a couple of things of interest that I will have to take note!

Many Thanks

Excellent article. I wonder if Google still follows this with everyone writing about how irrelevant meta tags are now.

Great information. From the list above it seems most of these ranking factors were mainly on-page SEO, which gets missed on a lot of websites even in 2014. Thanks for sharing

good article, I’ve worked on many people’s websites and the thing that astounds me the most is just how much people focus on links and forget all about their on-page SEO

cheers